New Initiative Aims to Address AI Safety Concerns

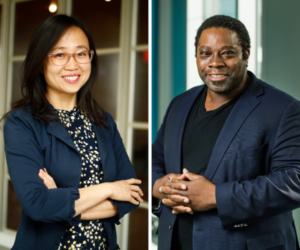

Artificial intelligence technologies continue to advance rapidly, raising critical questions about their safety and robustness. Renowned researcher Zico Kolter will take on some of these questions through a new program backed by former Google CEO Eric Schmidt.

The AI Safety Science program, launched by Schmidt Sciences with a $10 million commitment, funds foundational research intended to make safety a core part of AI development. The program also seeks to build a global research community dedicated to AI safety.

Kolter, who leads the Machine Learning Department at Carnegie Mellon University, will study adversarial transfer, the phenomenon in which an attack crafted against one AI model also works against others. His research will build on earlier work showing how to bypass the safety measures of popular AI systems such as ChatGPT and Google Bard. Kolter also serves on the board of OpenAI, the developer of ChatGPT.

“We’re thrilled to work together with Schmidt Sciences to conduct research on the fundamental scientific challenges underlying AI safety and robustness,” said Kolter.

The initiative has selected 27 projects to explore the fundamental science needed to understand the safety properties of AI systems. These projects aim to develop technical methods for testing and evaluating large language models (LLMs), so that the models become more reliable and less prone to errors or misuse.

“As AI systems advance, we face the risk that they will act in ways that contradict human values and interests — but this risk is not inevitable,” stated Eric Schmidt. “With efforts like the AI Safety Science program, we can help build a future in which AI benefits us all while maintaining safeguards that protect us from harm.”

Funded researchers will also receive computational support from the Center for AI Safety and API access from OpenAI.
